The pilot works. The demo sizzles. Then: silence. AI initiatives that felt unstoppable in a conference room stall the moment they hit the real world, buckling under ambiguous ownership, fragile pipelines, and KPIs that don't speak the CFO's language. If you've felt that sting, you're far from alone.

Here's the uncomfortable truth: scaling AI isn't just a technical problem. It's a governance, operations, and measurement problem—one that demands discipline without suffocation. And when you get it right, the economics change fast. Think shorter cycle times, fewer phantom projects, and a direct line from models to money.

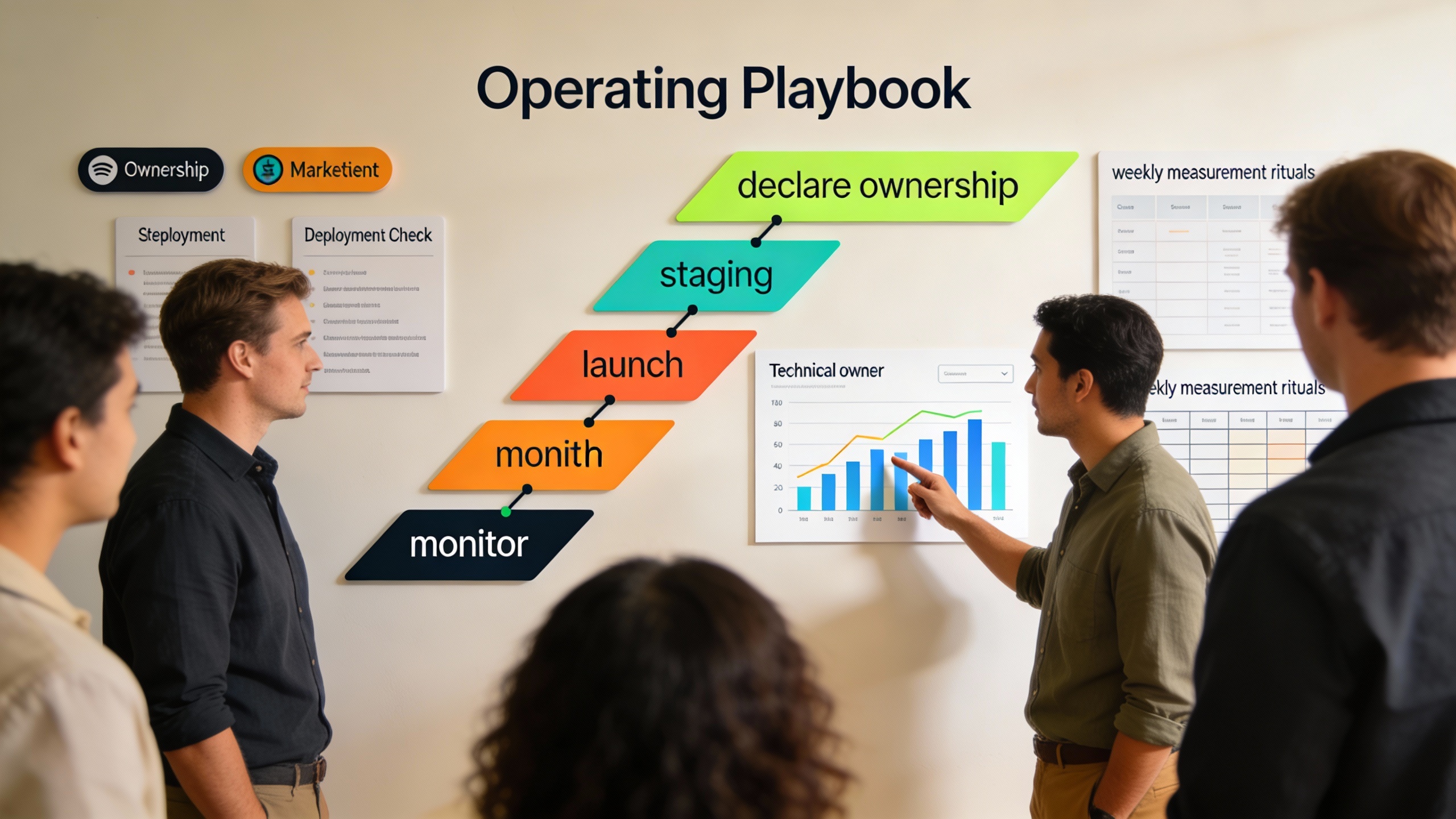

We'll cut through the noise with a pragmatic blueprint. Governance that accelerates instead of suffocating. MLOps that acts like a revenue engine room. KPIs the board actually respects. Along the way, we'll tackle marketing automation, content strategy, and social media marketing use cases, because revenue doesn't scale in a vacuum.

Governance That Speeds You Up (Yes, Really)

Most teams treat governance like an air brake. Forms. Committees. Endless approvals. But robust governance—done right—strips out confusion and lets you move with conviction. It puts names on the line. It draws boundaries so experimentation doesn't turn into chaos. It makes audits boring (which is exactly what you want when regulators call).

Adopt a three-pillar model: technical, business, and risk. Technical governance manages model lineage, data provenance, and reproducibility. Business governance assigns owners and connects outcomes to revenue. Risk governance keeps the system within ethical, legal, and brand-safe bounds. Clear, minimal, repeatable.
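To make the three pillars concrete, here is a minimal Python sketch of a per-model governance record with a release gate. Every class, field, and check name (`GovernanceRecord`, `release_blockers`, `REQUIRED_RISK_CHECKS`, and the rest) is hypothetical, and the gate assumes a higher KPI value is better. A real model registry would track far more. The point is the shape: each pillar owns a few required fields, and anything missing blocks release with a named reason instead of a committee meeting.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class TechnicalPillar:
    """Lineage, provenance, reproducibility (hypothetical fields)."""
    model_version: str
    parent_version: Optional[str]  # lineage: which model this one was derived from
    data_snapshot_id: str          # provenance: which data snapshot it was trained on
    training_commit: str           # reproducibility: code revision used for training


@dataclass
class BusinessPillar:
    """A named owner tied to a revenue-facing KPI (hypothetical fields)."""
    owner: str                     # a named person, not a team alias
    target_kpi: str
    baseline: float
    target: float                  # assumes higher is better for this KPI


@dataclass
class RiskPillar:
    """Ethical, legal, and brand-safety checks completed so far."""
    checks_passed: List[str] = field(default_factory=list)


# Hypothetical minimum set of risk reviews required before release.
REQUIRED_RISK_CHECKS = {"bias_review", "legal_review", "brand_safety"}


@dataclass
class GovernanceRecord:
    technical: TechnicalPillar
    business: BusinessPillar
    risk: RiskPillar

    def release_blockers(self) -> List[str]:
        """Return the reasons this model may not ship; an empty list means go."""
        blockers: List[str] = []
        t, b = self.technical, self.business
        if not (t.data_snapshot_id and t.training_commit):
            blockers.append("technical: missing provenance or code revision")
        if not b.owner:
            blockers.append("business: no named owner")
        if b.target <= b.baseline:
            blockers.append("business: target does not improve on baseline")
        missing = REQUIRED_RISK_CHECKS - set(self.risk.checks_passed)
        for check in sorted(missing):
            blockers.append(f"risk: {check} not completed")
        return blockers


record = GovernanceRecord(
    technical=TechnicalPillar("lead-scorer-v3", "lead-scorer-v2", "crm-snapshot-01", "a1b2c3d"),
    business=BusinessPillar(owner="jane.doe", target_kpi="qualified leads per week",
                            baseline=120.0, target=150.0),
    risk=RiskPillar(checks_passed=["bias_review", "legal_review"]),
)
print(record.release_blockers())  # ['risk: brand_safety not completed']
```

Returning a list of reasons rather than a bare yes/no makes every blocked release self-explaining, which is exactly what keeps audits boring.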