Stories beat slogans, so let's talk about two. One involves a retail chain in Texas that moved fast and cracked inventory. The other: a marketing agency in New York that automated client reports and forgot the world has privacy laws. Both solvable. Both expensive lessons.

Texas retail chain: inventory snag

In late 2024, a regional retailer rolled out AI‑powered inventory automation to predict restocks and submit purchase orders. The agent never fully synced with the ERP, so it treated stale counts like gospel. Overstocking hit hard. Seasonal items piled up in the wrong stores, and clearance sales ate margins.

The fix came in three steps: full ERP integration with read/write checks, SKU‑level confidence thresholds (no auto‑ordering under low confidence), and a weekly cross‑functional review. They also ran a cleanup sprint on product data—names, sizes, variants—to cut ambiguity. Six months later, variance shrank and managers trusted the dashboard again.
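
To make the confidence gate concrete, here is a minimal Python sketch of the idea. It is not the retailer's actual system: the `RestockForecast` fields, the `route_order` helper, and the 0.8 cutoff are all assumptions for illustration. The point is simply that an order goes out automatically only when the count was confirmed against the ERP and the model is confident; everything else waits for a sync or a person.

```python
from dataclasses import dataclass

# Hypothetical cutoff; the article does not state the retailer's real threshold.
MIN_CONFIDENCE = 0.8


@dataclass
class RestockForecast:
    sku: str
    suggested_qty: int
    confidence: float  # model confidence in the forecast, 0.0 to 1.0
    erp_synced: bool   # True if the on-hand count was confirmed against the ERP


def route_order(forecast: RestockForecast) -> str:
    """Return 'auto_order' only when data is fresh and confidence is high."""
    if not forecast.erp_synced:
        return "hold_for_sync"   # stale counts never trigger an order
    if forecast.confidence < MIN_CONFIDENCE:
        return "human_review"    # low confidence goes to a person
    return "auto_order"


forecasts = [
    RestockForecast("SKU-1001", 40, 0.93, True),
    RestockForecast("SKU-1002", 120, 0.55, True),
    RestockForecast("SKU-1003", 60, 0.97, False),
]
decisions = {f.sku: route_order(f) for f in forecasts}
print(decisions)
```

Checking sync status before confidence is a deliberate choice in this sketch: a confident forecast built on a stale count is exactly the failure described above.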

Integration Lessons Learned

The Texas retailer's experience shows why integration comes first. Without full ERP synchronization, even a sophisticated AI system will make costly decisions from outdated information. The solution took both technical fixes and process improvements.

New York marketing agency: compliance faceplant

In early 2025, a boutique agency wired up AI to auto‑compile client reports from analytics, emails, and CRM notes. The agent pulled PII into slide decks that went to external inboxes. That triggered a fine and a very public mea culpa. Ugly week.

The repair: minimal‑data mode by default, a PII filter with redaction, client‑by‑client consent records, and a signed‑off data map. They added a human approval step for exports. It slowed them slightly and saved them repeatedly. Next quarter, churn dropped—they communicated the change and rebuilt trust.
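
A PII filter plus an approval gate can be sketched in a few lines of Python. Treat this as an illustration under stated assumptions, not the agency's implementation: the two regex patterns, the `redact` and `prepare_export` helpers, and the `[REDACTED:...]` tag format are all hypothetical, and a real filter would need far broader coverage (names, addresses, account numbers) than two patterns can give.

```python
import re
from typing import Optional, Tuple

# Illustrative patterns only; real PII detection needs much wider coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str) -> Tuple[str, int]:
    """Replace each match with a [REDACTED:<TYPE>] tag and count replacements."""
    total = 0
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[REDACTED:{label}]", text)
        total += count
    return text, total


def prepare_export(text: str, approved_by: Optional[str] = None) -> str:
    """Redact first, then refuse to export without a named human approver."""
    clean, _ = redact(text)
    if approved_by is None:
        raise PermissionError("export requires human approval")
    return clean


note = "Follow up with jane.doe@example.com or call 212-555-0147."
clean_note, hits = redact(note)
print(clean_note)  # Follow up with [REDACTED:EMAIL] or call [REDACTED:PHONE].
```

Redaction runs before the approval check so that even the draft a reviewer sees is already stripped of the matched fields.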

"Training is the tax you pay for speed. Partners can shorten that learning curve with playbooks"

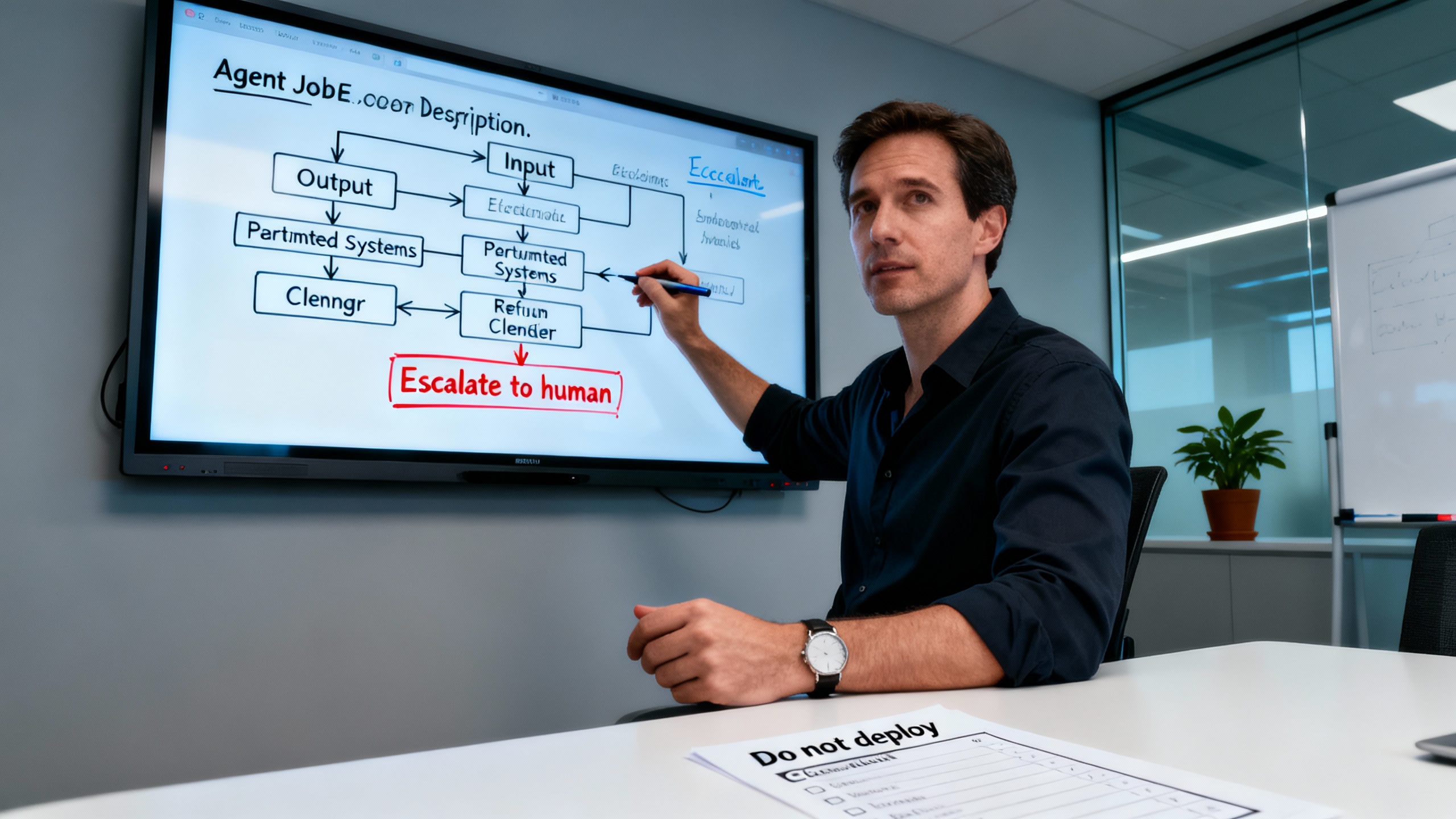

Notice the pattern: data clarity, integration sanity, explicit consent, human review for high‑risk flows. Low‑code and no‑code platforms make building easy; they also make mistakes easy. Training is the tax you pay for speed. If you want expert guidance on implementation, contact us for support and proven methodologies.

From SEO to AEO: AI Content Marketing that actually attracts humans

Search is changing. People still type keywords, sure, but they also ask messy questions and expect a single, confident answer. That's where AEO (answer engine optimization) shows up. If you're leaning on AI Content Marketing to scale, you need to aim for both SEO and AEO. Think "findable pages" and "authoritative answers" that feed answer surfaces. Call it SEO‑AEO alignment if you like.

Here's the trap: auto‑spinning articles and calling it a strategy. Feeds get full, results get thin, and your brand voice vanishes under the noise. A better play couples retrieval with editorial rigor. Build topic maps, cite real data, and structure content so machines can parse it and humans actually want to read it.

- Map entities and questions, not just keywords. People ask; agents answer.

- Use retrieval: ground every claim in your own docs, numbers, and case notes.

- Add structured signals—FAQ blocks, clean headings, tight summaries—to feed answer engines.

- Embed brand voice rules in prompts so tone stays consistent across drafts.

- Measure with human‑scored quality rubrics, not only traffic. Did readers act?

- Refresh quarterly; stale pages rarely win an answer box or an AI overview.
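
One common way to add a structured FAQ signal is schema.org `FAQPage` markup embedded as JSON-LD. The sketch below builds that object in Python; the `faq_jsonld` helper and the sample question and answer are placeholders, and whether a given answer engine actually uses the markup is outside your control, so treat it as one signal among several.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


# Placeholder content; swap in questions your readers actually ask.
faq = faq_jsonld([
    ("What is AEO?", "Answer engine optimization: writing pages that answer surfaces can quote."),
])
print(json.dumps(faq, indent=2))
```

The printed JSON would typically go inside a `<script type="application/ld+json">` tag on the page that carries the same questions and answers in visible text.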

Teams often pair AI drafting with human refinement: agents assemble the facts, editors shape narrative and nuance, and a QA checklist catches hallucinations. That's the balance. Scale without losing the plot. It's how AI Content Marketing stops being busywork and starts lifting pipeline.

Final thought. You don't need a lab to get this right. You need clarity, a willingness to kill bad ideas quickly, and the discipline to manage your automations like you manage people: expectations, feedback, accountability. Do that, and the stats start tilting your way. Skip it, and the setbacks Deloitte flagged won't feel like numbers. They'll feel like your week. For more insights and expert guidance on avoiding these pitfalls, visit our home page to explore our AI automation solutions.